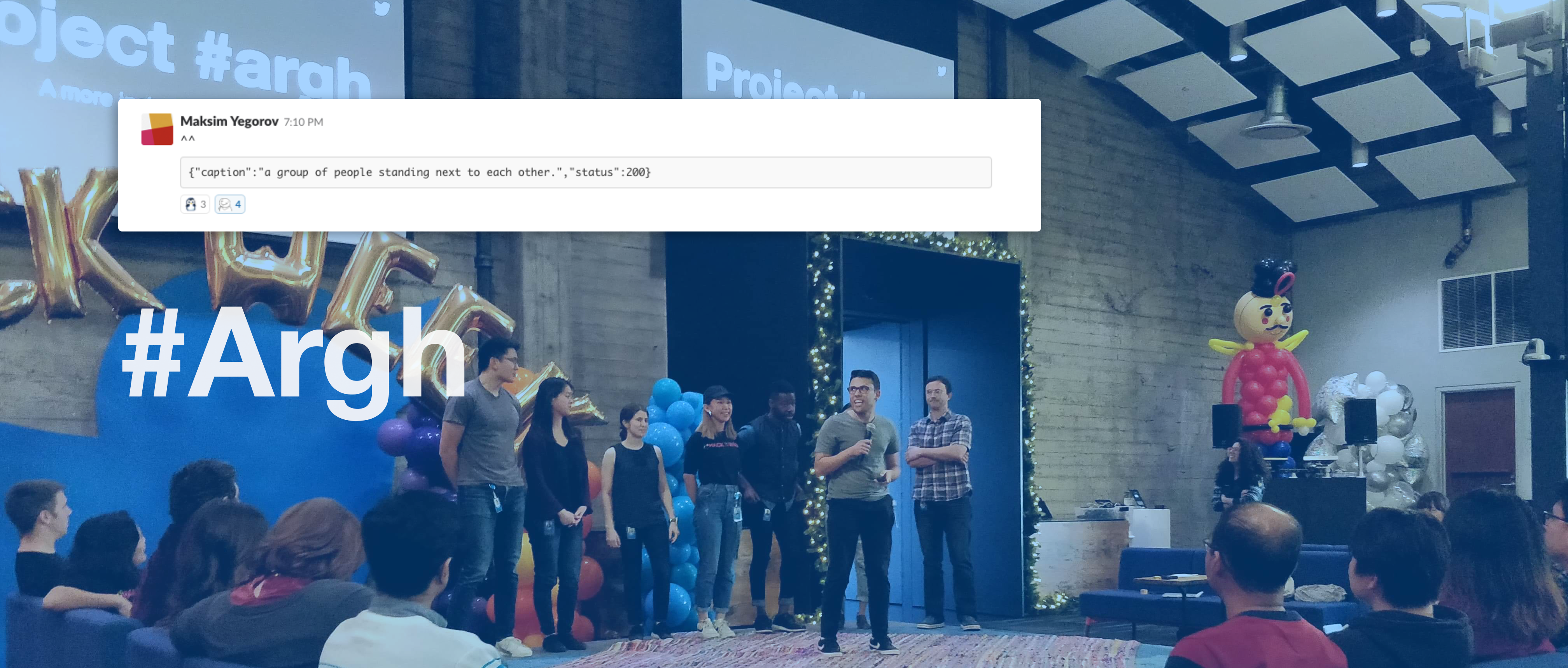

🥳 #Argh won the Judges Award at Twitter's 2018 #hackweek! 🙌🙌🙌 So... what is Argh anyway?

As we know, millions of images are tweeted on Twitter every day, but not everyone can see them 👀. Imagine if almost every image on Twitter were a black square. That's the Twitter experience for visually impaired users who rely on screen readers. We're leveraging computer vision to make the public conversation more inclusive. As a bonus, this work can improve search results, ranking, and more.

If you are interested in this project, please contact me for more details.

As a product designer, I collaborated with other team members to develop ideas and solutions.

I prototyped the product demo and helped our team run usability tests.

I worked with our team to restructure the information architecture and redesign the user journey.

Together, we built an efficient tool that helps visually impaired users better understand the content of images without seeing them.

We also shared ideas and explored other ways our new design could benefit Twitter.

Meet our awesome team! 🙌🙌🙌

Developers, designers, and PMs worked together to make this happen: teamwork makes the dream work!

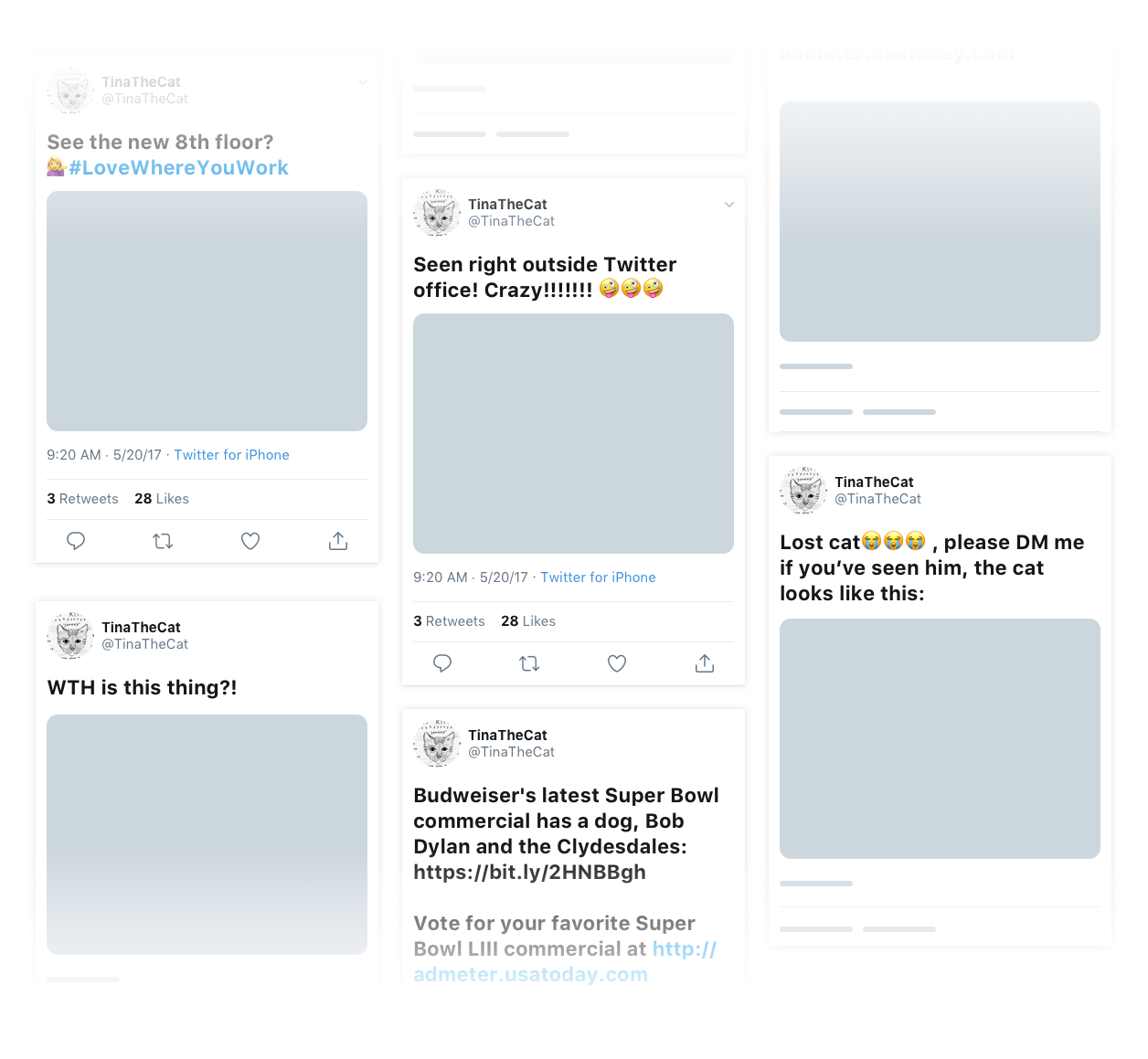

Imagine a world in which you can only “see” Tweets like these. There are over 39 million blind people in the world, roughly the population of Canada or California. Blind users miss much of what images tell the rest of us about the world. As one of the most popular social media platforms in the world, what could Twitter do to make the world a little better for them?

💻Machine learning + 📝description + 🧠imagination = the picture of what's going on in this beautiful world🌎!

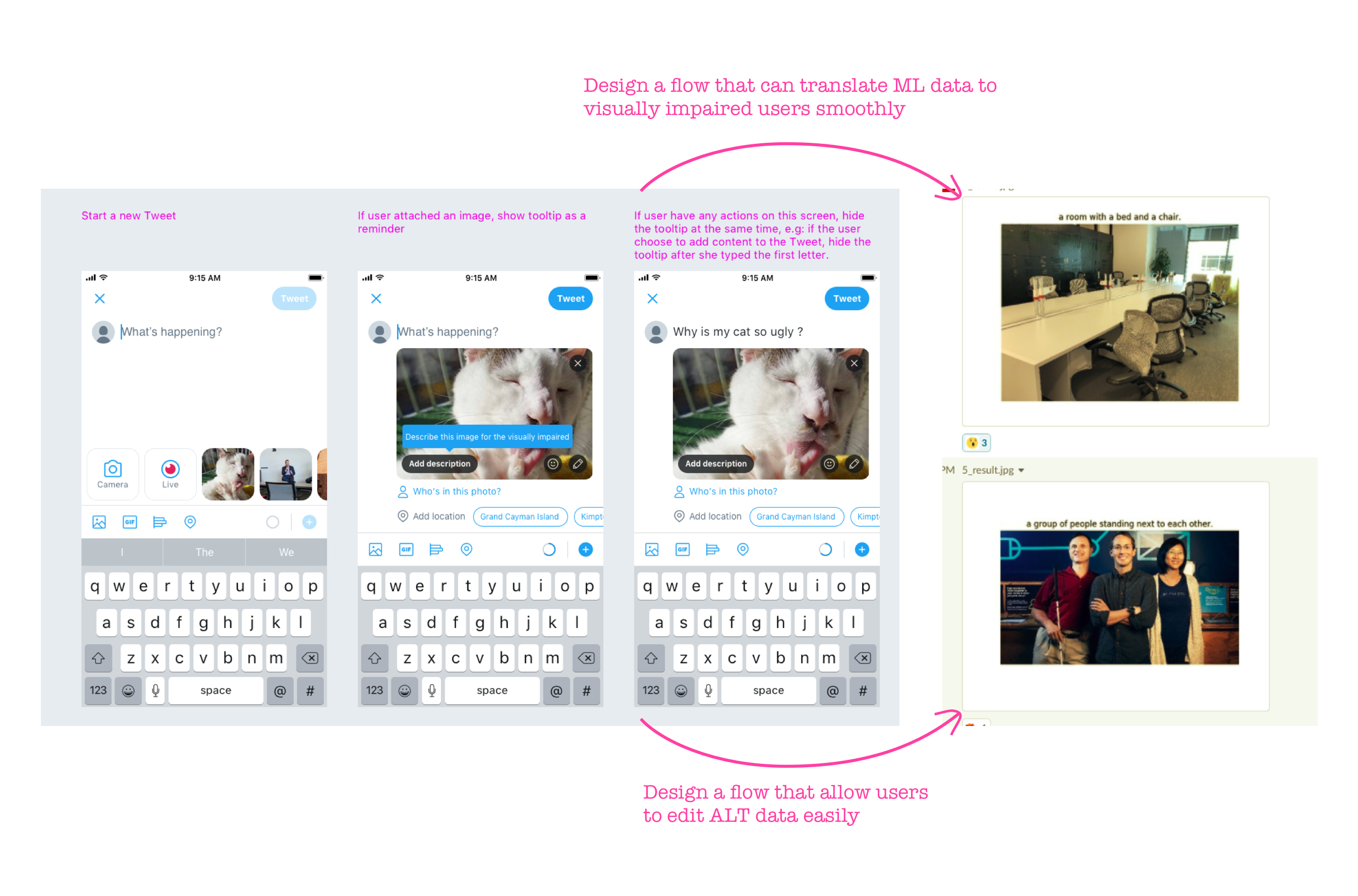

1. We updated the iOS client to show visible image descriptions and to include a reminder to add an image description.
2. After a Tweet was posted, we uploaded its images to an OCR service based on TesserOCR, hosted on our own box.
3. If no text was detected, we uploaded the image to Aurora, a service that captions images.
4. The image description then became available, so blind users could hear it as they scrolled through their timeline.
5. We let the original Tweeter see the caption and gave them an entry point to edit it.
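The steps above can be read as a fallback chain: use OCR text if there is any, otherwise use a generated caption. Below is a minimal Python sketch of that chain under stated assumptions, not the hackweek code. `ocr_service` and `caption_service` are hypothetical callables standing in for the TesserOCR box and Aurora, and giving human-written text (steps 1 and 5) priority over machine output is our assumption. For simplicity the sketch also skips OCR entirely when a human description already exists.

```python
"""Minimal sketch of an image-description fallback chain.

Assumption: ``ocr_service`` and ``caption_service`` are hypothetical
callables standing in for the TesserOCR box and the Aurora captioning
service; they are not real APIs.
"""
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ImageDescription:
    text: str
    source: str  # "human", "ocr", or "caption"


def describe_image(
    image_bytes: bytes,
    ocr_service: Callable[[bytes], str],
    caption_service: Callable[[bytes], str],
    human_alt_text: Optional[str] = None,
) -> ImageDescription:
    """Pick one description for an image, in order of preference."""
    # Assumption: a description written or edited by a person wins.
    if human_alt_text and human_alt_text.strip():
        return ImageDescription(human_alt_text.strip(), "human")

    # Step 2: try OCR first, since many tweeted images are mostly text.
    ocr_text = ocr_service(image_bytes).strip()
    if ocr_text:
        return ImageDescription(ocr_text, "ocr")

    # Step 3: no text detected, so fall back to a generated caption.
    return ImageDescription(caption_service(image_bytes).strip(), "caption")


if __name__ == "__main__":
    def no_text_ocr(image: bytes) -> str:
        return ""  # pretend OCR found no text in the image

    def fake_caption(image: bytes) -> str:
        return "a cat sitting on a windowsill"

    print(describe_image(b"<image bytes>", no_text_ocr, fake_caption))
    # ImageDescription(text='a cat sitting on a windowsill', source='caption')
```

Passing the services in as functions keeps the ordering logic testable without a running OCR box or captioning model.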

From the UX point of view, I also designed better flows both for visually impaired users and for users who are willing to help add more accurate alt text. Beyond the text captured automatically from images, this helps image Tweets get more natural, personal descriptions written by real people. Our ML model can also learn from these human-written descriptions, so it keeps improving and better emulates human language.

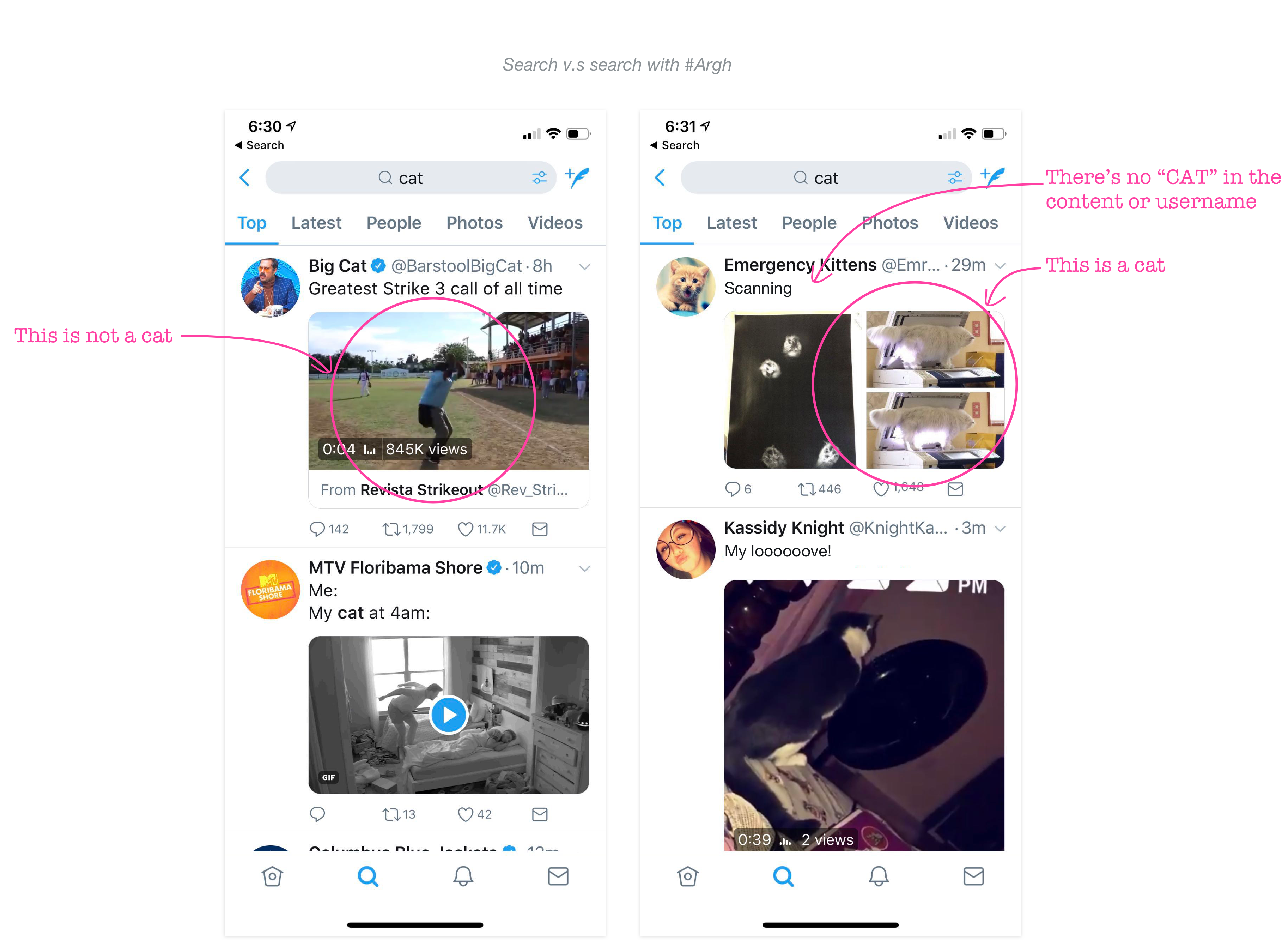

Argh benefits not only blind users but all users on Twitter. For example, Twitter's Tweet service (Tweetypie) can already pass metadata to search indexing. Even so, it is sometimes hard to surface the right content: a Tweet whose images relate to the search keywords won't show up unless its text contains the exact words the user searched for. Because Argh attaches a text description to each image, that description can be passed along as metadata, helping users find the right content.

“I want to see more Tweets about ‘cat’!” 🐈🐱😹🐾🐾🐾
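To illustrate why image descriptions help search, here is a toy in-memory inverted index in Python. It is a hypothetical sketch, not how Tweetypie or Twitter's search indexing actually work: `Tweet`, `build_index`, and `search` are illustrative names, and the index simply appends each image description to the Tweet text before tokenizing.

```python
"""Toy inverted index showing how image descriptions can improve search.

Assumption: illustrative only; ``Tweet``, ``build_index`` and ``search``
are hypothetical and do not reflect Twitter's real search stack.
"""
import re
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Tweet:
    tweet_id: int
    text: str
    image_descriptions: list[str] = field(default_factory=list)


def tokenize(text: str) -> set[str]:
    # Lowercase, then keep runs of ASCII letters and digits as tokens.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(tweets: list[Tweet], include_images: bool) -> dict[str, set[int]]:
    index: dict[str, set[int]] = defaultdict(set)
    for tweet in tweets:
        indexed_text = tweet.text
        if include_images:
            # Treat image descriptions as extra searchable metadata.
            indexed_text += " " + " ".join(tweet.image_descriptions)
        for token in tokenize(indexed_text):
            index[token].add(tweet.tweet_id)
    return index


def search(index: dict[str, set[int]], query: str) -> set[int]:
    # Return the IDs of Tweets that contain every query token.
    tokens = tokenize(query)
    if not tokens:
        return set()
    return set.intersection(*(index.get(t, set()) for t in tokens))


if __name__ == "__main__":
    tweets = [
        Tweet(1, "Look who woke me up this morning!", ["a cat sitting on a bed"]),
        Tweet(2, "My cat is judging me again"),
        Tweet(3, "Sunset at the beach", ["an orange sky over the ocean"]),
    ]
    print(search(build_index(tweets, include_images=False), "cat"))  # {2}
    print(search(build_index(tweets, include_images=True), "cat"))   # {1, 2}
```

The sketch scores description matches exactly like text matches; a production system would probably want to weight or label them separately.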